AI Engineer & Researcher at Spartan | Expert in AI, Data Science & Machine Learning

June 11, 2025

A Glimpse into AI’s Past: Yann LeCun’s LeNet-1 Demo

![Convolutional Network Demo from 1989 [restored version]](https://i.ytimg.com/vi/H0oEr40YhrQ/hqdefault.jpg) Convolutional Network Demo from 1989 [restored version]

Convolutional Network Demo from 1989 [restored version]

Last week, I shared a restored 1989 video of Yann LeCun demoing LeNet-1, the first convolutional neural network (CNN) to recognize handwritten digits in real time. The post struck a chord, racking up over 450,000 impressions and sparking hundreds of comments from AI enthusiasts, engineers, and researchers. It’s no surprise why - this demo is a time capsule of innovation, showcasing the roots of modern deep learning.

Hao Hoang on LinkedIn

𝗬𝗮𝗻𝗻 𝗟𝗲𝗖𝘂𝗻 𝗿𝘂𝗻𝗻𝗶𝗻𝗴 𝗮 𝗿𝗲𝗮𝗹-𝘁𝗶𝗺𝗲 𝗖𝗼𝗻𝘃𝗼𝗹𝘂𝘁𝗶𝗼𝗻𝗮𝗹 𝗡𝗲𝘂𝗿𝗮𝗹 𝗡𝗲𝘁𝘄𝗼𝗿𝗸 𝗱𝗲𝗺𝗼… 𝗶𝗻 1989. Long before GPUs, PyTorch, or TensorFlow existed, this demo recognized handwritten digits in real time using a DSP card and a 486 PC. This was LeNet-1. It had just ~9K parameters. It changed everything. Today’s AI is built on foundations like this. Watch it, and remember how far we’ve come. #AI #DeepLearning #NeuralNetworks #ComputerVision #HistoryOfAI #YannLeCun

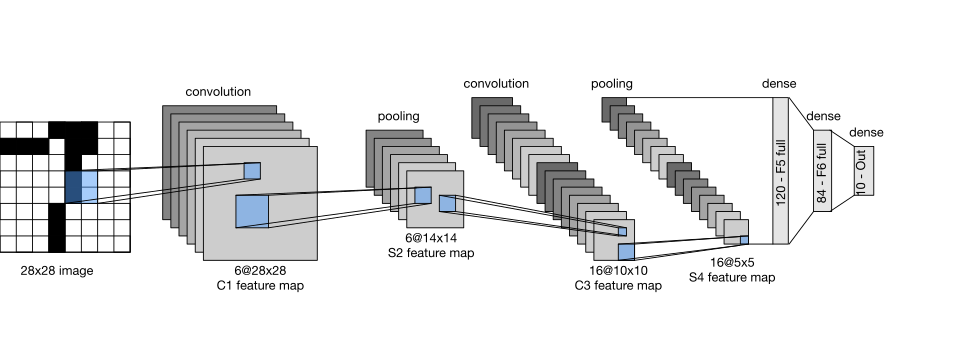

Running on a 486 PC with an AT&T DSP32C chip (capable of a whopping 12.5 million multiply-accumulate operations per second), LeNet-1 had just 9,760 parameters and used a lean architecture: two 5x5 convolutional layers with stride 2, followed by two fully connected layers. No pooling layers (too expensive!), no batch norm, no dropout - just raw ingenuity. Yet, it worked, recognizing digits with speed and accuracy that were groundbreaking for the era.

The irony? The video was restored using a modern ConvNet-based enhancement tool - a poetic nod to how far we’ve come. As I noted in the comments, “A ConvNet demo from 1989, enhanced by a ConvNet from 2024. Full circle.” 😊

Why LeNet-1 Matters Today

LeNet-1 wasn’t just a cool demo; it introduced concepts that remain the backbone of computer vision:

- Convolutional Layers with Weight Sharing: Reduced parameters while preserving spatial relationships.

- Hierarchical Feature Learning: From edges to complex patterns, learned directly from pixels.

- End-to-End Learning: No hand-crafted features - just raw data to predictions.

These ideas, born in an era of limited compute, remind us that innovation isn’t about having the biggest hardware but asking the right questions. As one commenter, Abhishek Gupta, put it: “It’s proof that groundbreaking innovation doesn’t need massive compute, just relentless vision.”

In my recent TensorFlow/Keras experiments (shared in my notebook), LeNet-1 and its successors still achieve impressive accuracy on MNIST, proving their enduring relevance. But the real lesson is in their simplicity. With today’s GPUs offering 10 million times the compute power of 1990s DSPs, are we over-engineering solutions? As Andrea Amedeo Serravalle asked in the comments, “What if modern AI is over-engineered?” It’s a question worth pondering.

The Community Speaks: Your Thoughts on AI’s Evolution

The response to the LeNet-1 post was incredible, with insights that deepened the conversation:

- Syed Muqtasid Ali asked if we’re missing opportunities by focusing on bigger models instead of efficiency and novel architectures. I agree - efficiency is key, especially as we scale AI for real-world applications.

- Vu Hung Nguyen shared a GitHub repo diving into LeNet’s implementation in TensorFlow/Keras, sparking a discussion on parameter pruning and generalization. Fewer parameters can mean less memorization, more robustness.

- Firas Belhiba connected LeNet to the Bellman equation (1953), reminding us how deep learning builds on decades-old foundations.

- Vamsi Chittoor humorously noted that a 486 PC was “flexing on today’s researchers who need a small country’s power grid.” 😄 Efficiency, anyone?

The comments highlighted a shared appreciation for AI’s pioneers and a curiosity about where we’re headed. So, let’s keep the conversation going: What’s one lesson from AI’s past that you think we should apply today? Drop your thoughts in the comments or DM me!

Looking Ahead: Efficiency and Innovation

LeNet-1’s legacy challenges us to balance power with purpose. As we push for larger models, let’s not forget the elegance of simplicity. My current work at Spartan focuses on optimizing AI for real-world impact - think lightweight models for edge devices or pruning techniques to boost efficiency without sacrificing accuracy. I’ll share more on this in my next newsletter, including a code walkthrough of a modernized LeNet inspired by your feedback.

For now, check out the original LeNet-1 video here. Want to dive deeper? Reply with your questions, and I’ll address them in the next edition!

Stay Curious, Keep Building

Hao Hoang

#AI #DeepLearning #NeuralNetworks #ComputerVision #HistoryOfAI